In the vast universe of online communities, user-generated content has become the backbone of engagement. Every type of content, from reviews to comments, is pivotal in shaping the digital experience.

However, with this surge in user-generated content, the moderation process becomes paramount.

Why?

Because content moderation is vital to ensure that harmful content, which might tarnish the essence of these platforms, is kept at bay.

The moderation team, equipped with advanced tools, is the unsung hero in this narrative. Their task isn't just about removing content but preserving the platform's integrity, making it a haven for genuine interactions.

Understanding the nuances of content moderation is essential for those in social media marketing. It's not just about promoting a brand; it's about fostering a community where every voice is heard, but harmful noise is filtered out.

Content moderation goes beyond just removing inappropriate content. It's about creating a safe, inclusive, and positive online environment for all users.

As we navigate this digital age, it's clear: to maintain the sanctity of our cherished online spaces, the role of language detection in content moderation and filtering cannot be overlooked.

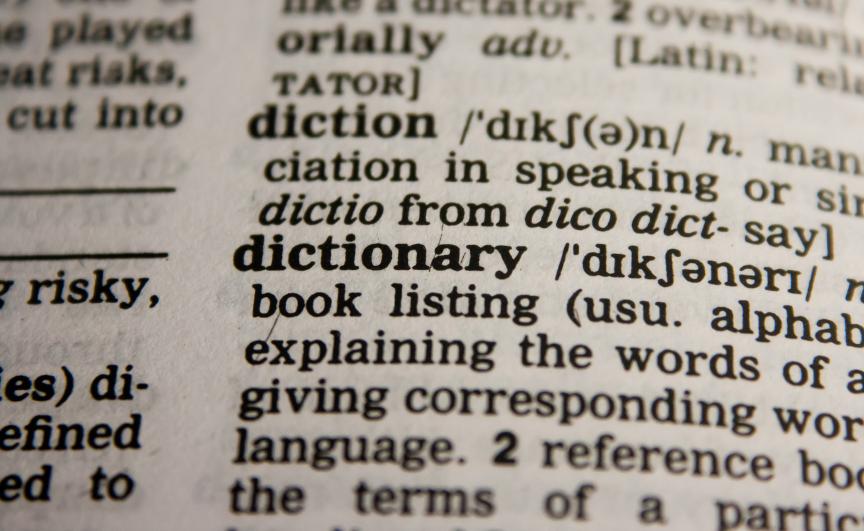

Content moderation definition:

Let's get started with a clear and concise definition of what content moderation is - and is not:

Content moderation is the process by which digital platforms review and assess user-generated submissions, either before the content is published or shortly afterwards. Creating a safe, respectful, and inclusive online environment is at the heart of the content moderation process.

Content moderation helps platforms sift through vast data, ensuring that harmful or inappropriate material doesn't see the light of day.

Moderators look for content that could be offensive, misleading, or violate platform guidelines. For instance, a content moderator might review a user's post on a social media platform, checking for hate speech, misinformation, or graphic imagery.

If such elements are found, the post will be flagged or removed, ensuring the platform remains a trusted space for its users.

However, content moderation isn't about stifling freedom of expression or curating an echo chamber. It's not an approach to moderation that seeks to suppress differing opinions or healthy debates.

For example, a user might post a political opinion different from the majority. While some might disagree with the viewpoint, a content moderator would not remove it simply because it's a minority opinion as long as it adheres to the platform's guidelines and doesn't incite harm or spread falsehoods.

Enter the Content Moderator

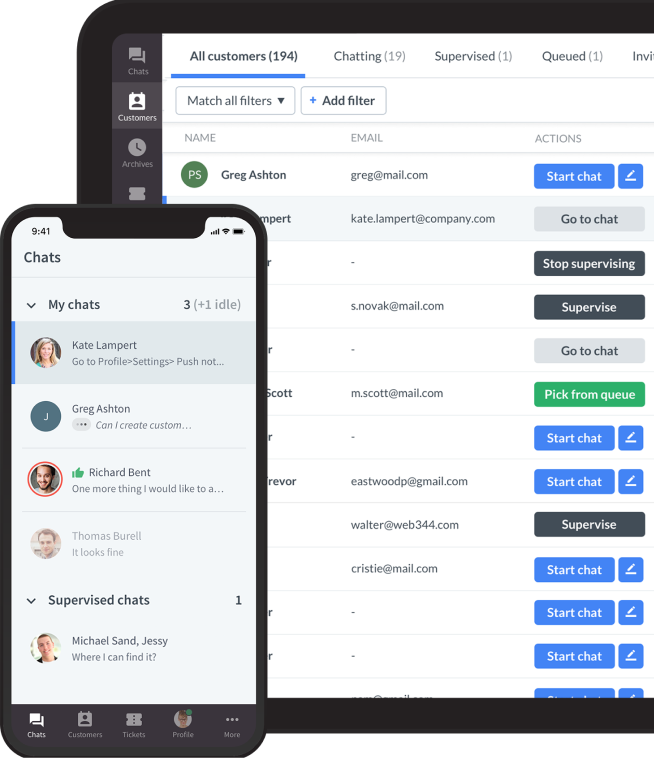

A content moderator is tasked with overseeing user-generated content across various online platforms.

Their primary role is to review the content, ensuring it aligns with platform guidelines and community standards. Human content moderators often employ a combination of manual checks and automated tools to streamline their content moderation work. Effective content moderation requires a keen eye and an understanding of the platform's ethos.

Content moderators also frequent online communities, forums, and social media to monitor discussions and user interactions. They represent the platform's commitment to maintaining a safe and respectful digital environment.

In their arsenal of tools, language detection aids significantly, allowing them to categorise and review content in multiple languages efficiently.

What is Language Detection?

At its essence, language detection identifies the language of a given piece of content.

Pinpointing the language becomes paramount in an era where content is produced and consumed in real time. This process ensures that the content submitted is directed to the right audience and aligns with the platform's content moderation policies.

For instance, an advertisement intended for a French audience might be ineffective or even misunderstood if presented to an English-speaking audience without proper translation or adaptation.
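To make the idea concrete, here is a minimal sketch of language identification based on counting common words. The word lists, the `detect_language` name, and the `"und"` (undetermined) fallback are illustrative assumptions, not a description of any particular platform's detector.

```python
import re

# Tiny illustrative stopword lists; a production detector would use far more data.
STOPWORDS = {
    "en": {"the", "and", "is", "of", "to", "in", "that", "for", "with", "this"},
    "fr": {"le", "la", "les", "et", "est", "de", "des", "un", "une", "pour"},
    "es": {"el", "la", "los", "y", "es", "de", "un", "una", "para", "que"},
}


def detect_language(text: str) -> str:
    """Return the language whose stopwords appear most often, or "und" if none match."""
    # [^\W\d_]+ matches runs of letters, including accented ones.
    words = re.findall(r"[^\W\d_]+", text.lower())
    scores = {lang: sum(w in vocab for w in words) for lang, vocab in STOPWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "und"


print(detect_language("Cette offre est valable pour les clients de la région"))  # fr
```

A word-count approach like this is easy to follow but struggles with short texts, which is one reason real systems rely on richer statistical models.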

The Role of Language Detection in Content Moderation and Filtering

As the digital realm burgeons with diverse content, the need for a practical content moderation approach intensifies. Human moderators, skilled as they might be, face the Herculean task of sifting through vast volumes of content.

Here, language detection proves invaluable. Categorising content based on its language facilitates quicker and more accurate moderation decisions. For example, content deemed acceptable in one culture could be inappropriate in another.

Detecting the language ensures that such content is reviewed by moderators familiar with the cultural and linguistic nuances, promoting trust and safety.

In essence, language detection isn't merely a technical tool but a cornerstone of maintaining online community integrity.

The Importance of Language Detection in Today's Digital Age

The digital age has transformed the world into a global village.

As geographical boundaries blur, the diversity in online content multiplies. In such a landscape, language detection plays a pivotal role. It ensures that users are presented with content they can comprehend, elevating their digital experience.

Consider a multinational platform where users from different countries contribute. Without effective language detection, the platform would be a cacophony of languages, potentially alienating users.

Platforms can foster stronger global connections by ensuring content is presented in a familiar language.

How Language Detection Works

Beyond mere word recognition, language detection delves deep into the intricacies of language.

Leveraging algorithms and machine learning, it examines linguistic patterns such as characteristic character sequences, common words, grammar, and context. A post that blends English and Spanish in a single sentence is a good test of its abilities.

A rudimentary system might falter, but with sophisticated models, such mixed-language content can be accurately identified, ensuring it aligns with the platform's moderation guidelines and reaches the intended audience.
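One simple way to reason about mixed-language posts is to measure how much of the text belongs to each language rather than forcing a single label. The sketch below does this with two small, hand-written vocabularies; the word lists, function names, and the 25% threshold are all assumptions made for illustration.

```python
import re
from collections import Counter

# Small, disjoint illustrative vocabularies; real systems learn these from data.
VOCAB = {
    "en": {"i", "love", "this", "place", "the", "is", "and", "but", "food", "very"},
    "es": {"pero", "la", "comida", "es", "muy", "cara", "el", "y", "que", "me"},
}


def language_shares(text: str) -> dict[str, float]:
    """Return the share of recognised words that belong to each language."""
    words = re.findall(r"[^\W\d_]+", text.lower())
    counts = Counter(lang for w in words for lang, vocab in VOCAB.items() if w in vocab)
    total = sum(counts.values())
    return {lang: counts[lang] / total for lang in VOCAB} if total else {}


def is_mixed(text: str, min_share: float = 0.25) -> bool:
    """Flag text as mixed when at least two languages each reach min_share."""
    shares = language_shares(text)
    return sum(share >= min_share for share in shares.values()) >= 2


print(is_mixed("I love this place pero la comida es muy cara"))  # True
```

Reporting shares instead of one label lets a platform send a mixed post to a moderator who reads both languages.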

Challenges in Language Detection

Language detection for content moderation faces several challenges.

The sheer volume of content online demands a robust moderation system capable of swift and accurate decisions. However, languages evolve, with slang, dialects, and code-switching often blurring linguistic boundaries. Such nuances can confound even sophisticated systems, leading to misclassifications.

Furthermore, a purely reactive moderation approach, which responds only after content has been flagged, can be less effective in real-time scenarios.

The dynamic nature of language and the vastness of digital content underscore the complexities in ensuring precise language detection for content moderation and filtering.
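Because misclassifications are a real risk, a common safeguard is to accept a detector's answer only when it is confident and otherwise mark the language as undetermined. The sketch below assumes a detector that returns a score per language between 0 and 1; the `pick_language` name and the 0.7 threshold are hypothetical choices.

```python
def pick_language(scores: dict[str, float], min_confidence: float = 0.7) -> str:
    """Return the top-scoring language, or "und" when the detector is unsure."""
    if not scores:
        return "und"
    best = max(scores, key=scores.get)
    return best if scores[best] >= min_confidence else "und"


print(pick_language({"en": 0.92, "es": 0.08}))  # en
print(pick_language({"en": 0.55, "es": 0.45}))  # und
```

Content labelled `"und"` can then be held for a human to look at instead of being routed on a guess.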

The Basics of Content Moderation

Content moderation involves reviewing and monitoring online content to ensure it adheres to platform-specific guidelines and standards.

From protecting brand reputation to ensuring user safety, content moderation plays a pivotal role in maintaining the integrity of online platforms.

Language detection is the first line of defence. By identifying the language, moderators can route content to experts fluent in that language, ensuring accurate and culturally sensitive moderation.
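The routing step described above can be sketched in a few lines. This example assumes each item already carries a detected language code; the team names and the fallback queue are hypothetical.

```python
from collections import defaultdict

# Hypothetical mapping from language code to a moderator team.
TEAMS = {"en": "english-team", "fr": "french-team", "es": "spanish-team"}
FALLBACK_TEAM = "general-review"


def route(items: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Group (text, language_code) pairs into per-team review queues."""
    queues: dict[str, list[str]] = defaultdict(list)
    for text, lang in items:
        # Languages without a dedicated team fall back to a general queue.
        queues[TEAMS.get(lang, FALLBACK_TEAM)].append(text)
    return dict(queues)


print(route([("Hello", "en"), ("Bonjour", "fr"), ("Hallo", "de")]))
```

The fallback queue matters in practice: content in a language nobody on the team covers should still be reviewed, not silently dropped.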

Filtering Content: Beyond Just Moderation

In the digital age, users are inundated with a plethora of content.

Filtering becomes essential both as a subsequent step to moderation and as a means of curating a user's online experience.

For instance, while moderation ensures no harmful video content is uploaded on a video streaming platform, filtering ensures users see content tailored to their viewing history and preferences.

The Concept of Content Filtering

Content filtering is more than just an automation tool; it's a sophisticated system that utilises algorithms to sift through vast amounts of data.

For example, social media content moderation might focus on removing harmful posts, but filtering determines which posts appear on a user's feed first. It considers user preferences, interactions, platform guidelines, and even trending topics to curate a feed that resonates with the user.
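As a rough illustration of how such a feed might be ordered, the sketch below scores each post by the user's interest in its topic and adds a small boost for trending posts. The `Post` fields, the interest weights, and the boost value are assumptions for the example, not how any specific platform ranks content.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    topic: str
    trending: bool = False


def rank_feed(posts: list[Post], interests: dict[str, float],
              trending_boost: float = 0.5) -> list[Post]:
    """Order posts by the user's interest in the topic, plus a boost when trending."""
    def score(post: Post) -> float:
        return interests.get(post.topic, 0.0) + (trending_boost if post.trending else 0.0)

    return sorted(posts, key=score, reverse=True)


posts = [
    Post("Budget tips", "finance"),
    Post("Lisbon guide", "travel"),
    Post("Match report", "sport", trending=True),
]
feed = rank_feed(posts, {"travel": 1.0, "finance": 0.2})
print([p.title for p in feed])  # ['Lisbon guide', 'Match report', 'Budget tips']
```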

How Filtering Differs from Moderation

Moderation and filtering, though interconnected, serve different purposes. Effective content moderation is a gatekeeper, ensuring content aligns with platform standards. For instance, a post promoting hate speech would be removed during moderation.

Filtering, on the other hand, is about curation. It ensures that users are not just protected from inappropriate content but are also engaged.

For example, a user interested in travel would see more travel-related posts on their social media feed due to effective filtering.

The Role of Language Detection in Filtering

Language detection plays a pivotal role in enhancing the user experience through filtering.

Consider a global platform with diverse user demographics. Detecting the language ensures that content moderation solutions are not just about safety but also about relevance.

If a user primarily interacts in Spanish, detecting this preference means they're more likely to receive content in Spanish, ensuring a personalised and satisfying online experience.
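A preference like this could be inferred from the languages of a user's recent interactions. The sketch below treats a language as preferred only when it clearly dominates; the function name, the default language, and the 60% threshold are illustrative assumptions.

```python
from collections import Counter


def preferred_language(interaction_langs: list[str], default: str = "en",
                       min_share: float = 0.6) -> str:
    """Return the dominant language in a user's history, or the default if none dominates."""
    if not interaction_langs:
        return default
    lang, count = Counter(interaction_langs).most_common(1)[0]
    return lang if count / len(interaction_langs) >= min_share else default


print(preferred_language(["es", "es", "en", "es"]))  # es
```

Requiring a clear majority avoids switching a multilingual user's feed on the strength of one or two posts.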

The Technical Side of Language Detection

Delving into the realm of content moderation, it's evident that the backbone of effective systems lies in the precision of language detection. This technical prowess is not just about identifying words but understanding the nuances of language, making it possible to moderate content efficiently.

Algorithms and Machine Learning in Language Detection

The bedrock of modern language detection tools combines advanced algorithms and machine learning.

For instance, when monitoring user-generated content on a global platform, automated moderation systems rely on these algorithms to swiftly identify the language of the content. Trained on extensive datasets, these systems can often differentiate between dialects, slang, and regional variations, although accuracy tends to drop on very short or informal text.

This ensures that human moderation teams can focus on content that requires a nuanced approach, such as discerning harmful or illegal content from genuine posts.
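One widely used family of techniques compares the character sequences in a text against profiles learned from sample text in each language. The sketch below builds trigram profiles from a single sentence per language and picks the profile with the most overlap. The sample sentences and function names are assumptions, and a real system would train on far larger corpora.

```python
from collections import Counter

# One sample sentence per language stands in for a real training corpus.
SAMPLES = {
    "en": "the quick brown fox jumps over the lazy dog and the cat is in the house",
    "fr": "le renard brun saute par dessus le chien paresseux et le chat est dans la maison",
    "es": "el zorro marrón salta sobre el perro perezoso y el gato está en la casa",
}


def trigrams(text: str) -> Counter:
    """Count overlapping three-character sequences, padding with spaces at the edges."""
    padded = f" {text.lower()} "
    return Counter(padded[i:i + 3] for i in range(len(padded) - 2))


PROFILES = {lang: trigrams(sample) for lang, sample in SAMPLES.items()}


def classify(text: str) -> str:
    """Return the language whose profile shares the most trigrams with the text."""
    grams = trigrams(text)
    scores = {
        lang: sum(count for gram, count in grams.items() if gram in profile)
        for lang, profile in PROFILES.items()
    }
    return max(scores, key=scores.get)


print(classify("the dog is in the house"))  # en
```

Character-level features work without a dictionary, which helps with misspellings and informal writing.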

The Evolution of Language Detection Tools

The progression of language detection tools mirrors the broader trajectory of technological evolution. Early methods, reliant on dictionary-based lookups, were limited in scope and accuracy.

Today, AI-driven models, informed by vast amounts of data, can detect the language in real time, even in mixed-language content.

For example, a post blending English and French can be accurately identified, aiding content moderation systems in their task.

Limitations and Room for Improvement

Yet, despite these advancements, challenges persist. Some dialects or regional vernaculars can confound even the most advanced systems. Additionally, the dynamic nature of language, with new slang and phrases emerging continually, means that systems need constant updates.

Recognising these limitations is not a sign of defeat but an acknowledgement that content moderation is essential and ever-evolving. As technology advances, there's optimism that the gaps in language detection will narrow, further bolstering the efficacy of content moderation.

Real-world Applications

The practical implications of language detection are vast, actively moulding how we interact with online content. From filtering out offensive content to tailoring user experiences, the real-world applications of this technology are manifold.

Social Media Platforms and Language Detection

Social media platforms are rife with user-generated content, spanning myriad languages. Consider a user in Spain posting an update and a friend in Japan wanting to understand it.

Language detection tools identify the content's language, offering translation options. Moreover, these platforms use this technology to suggest content, ensuring users receive posts in languages they're comfortable with.

An example is Facebook's automatic translation feature, which relies on language detection to offer translations of user-generated posts and comments.

E-commerce and Review Filtering

Imagine browsing an international e-commerce site and coming across a product with hundreds of reviews. Language detection ensures that you, an English speaker, see reviews in English first, enhancing your shopping experience.

For instance, Amazon uses language detection to filter and categorise reviews, ensuring that users can easily find feedback relevant to them and streamlining the moderation that is needed.
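Showing reviews in the shopper's language first can be expressed as a simple sort. The sketch below assumes each review already has a detected language and a helpful-vote count; the `Review` fields and the tie-breaking rule are hypothetical and do not describe any retailer's actual system.

```python
from dataclasses import dataclass


@dataclass
class Review:
    text: str
    lang: str
    helpful_votes: int


def order_reviews(reviews: list[Review], user_lang: str) -> list[Review]:
    """Show reviews in the user's language first, then sort by helpful votes."""
    # False sorts before True, so matching-language reviews come first.
    return sorted(reviews, key=lambda r: (r.lang != user_lang, -r.helpful_votes))


reviews = [
    Review("Sehr gut", "de", 40),
    Review("Works well", "en", 5),
    Review("Great value", "en", 12),
    Review("Muy bueno", "es", 7),
]
print([r.text for r in order_reviews(reviews, "en")])
# ['Great value', 'Works well', 'Sehr gut', 'Muy bueno']
```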

News Websites and Comment Moderation

News websites are hotspots for vibrant discussions.

However, they're also susceptible to spam and inappropriate comments. Language detection helps the content moderation team identify the language of comments, ensuring that they're directed to the appropriate moderation method.

For instance, a comment in French on an English news article might be flagged for review. Human moderators then review such comments to ensure that discussions remain constructive.

The BBC, for example, employs a combination of automated and human content moderation to manage the vast volume of comments on their site, ensuring that discussions are relevant and respectful.

Automated Content Moderation

Automated content moderation is the frontline defence against inappropriate or harmful content on digital platforms. By leveraging algorithms and machine learning, platforms can swiftly scan vast amounts of user-generated content, flagging potential issues.

For instance, YouTube employs an automated system that detects and removes content violating its guidelines, often before users view it. However, while automation enhances efficiency, it's not infallible.

Misclassifications can occur, underscoring the need for a human touch in moderation.
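A common way to combine automation with human judgement is to act automatically only on clear-cut cases and send uncertain ones to a person. The sketch below assumes a classifier that outputs a violation score between 0 and 1; the `triage` name and both thresholds are illustrative.

```python
def triage(violation_score: float, remove_at: float = 0.95, review_at: float = 0.6) -> str:
    """Map a classifier's violation score to a moderation action."""
    if violation_score >= remove_at:
        return "remove"          # clear violation, handled automatically
    if violation_score >= review_at:
        return "human_review"    # uncertain, escalate to a moderator
    return "approve"


for score in (0.99, 0.7, 0.1):
    print(score, triage(score))
```

Lowering `review_at` sends more content to humans, which trades moderator workload for fewer missed violations.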

Common Content Moderation Tools

Several tools aid in the content moderation process, each tailored to specific needs. For text-based content, tools like CleanSpeak filter out profanity and hate speech.

For image and video content, platforms might employ tools like Google's Cloud Vision API, which detects inappropriate visuals. Additionally, platforms like Facebook utilise bespoke tools that combine machine learning and user feedback to monitor content.

These tools, while diverse, share a common goal: ensuring online spaces are safe, respectful, and adhere to platform guidelines.
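To give a feel for what the simplest kind of text filter does, here is a generic word-list sketch. It is not CleanSpeak's API or any vendor's implementation, and the blocklist terms are mild placeholders chosen for the example.

```python
import re

# Placeholder terms; a real blocklist would be maintained per language.
BLOCKLIST = {"darn", "heck"}


def mask_blocked(text: str) -> str:
    """Replace whole words found in the blocklist with asterisks."""
    def mask(match: re.Match) -> str:
        word = match.group(0)
        return "*" * len(word) if word.lower() in BLOCKLIST else word

    return re.sub(r"[^\W\d_]+", mask, text)


print(mask_blocked("Well, DARN it, what the heck!"))  # Well, **** it, what the ****!
```

Because blocklists are language-specific, knowing the language of a post determines which list should be applied.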

The Future of Language Detection in Content Moderation

The realm of language detection in content moderation is poised for transformative advancements.

As we stand at the cusp of technological evolution, integrating AI and machine learning promises to redefine our current systems. For instance, platforms like Twitter, inundated with millions of tweets daily, will benefit from enhanced tools that can swiftly and accurately moderate content in multiple languages, ensuring a safer user experience.

The Role of AI and Machine Learning

The infusion of AI into language detection is not merely about identifying languages but delving deeper into linguistic intricacies.

Advanced models are being trained to discern context, detect sarcasm, and even gauge the emotion behind a text.

A practical example is Google's BERT, a language model that Google has applied to Search to interpret the context of words in queries and return more relevant results. Such advancements will be pivotal in moderating content that might be ambiguous or context-dependent.

Ethical Considerations

The marriage of technology and ethics is non-negotiable.

As language detection tools become more sophisticated, there's a pressing need to address potential biases. For instance, ensuring that lesser-known languages aren't sidelined in favour of dominant ones is crucial. Moreover, respecting user privacy becomes paramount as AI models delve into understanding user sentiment and emotion.

Platforms must strike a balance, ensuring that while content is moderated for safety, users' rights and cultural nuances are upheld.
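One practical check for this kind of bias is to measure detection accuracy separately for each language instead of relying on a single overall figure. The sketch below uses a handful of made-up labelled examples; the data and the function name are purely illustrative.

```python
from collections import defaultdict


def accuracy_by_language(samples: list[tuple[str, str]]) -> dict[str, float]:
    """Compute accuracy per true language from (true_language, predicted_language) pairs."""
    totals: dict[str, int] = defaultdict(int)
    correct: dict[str, int] = defaultdict(int)
    for true_lang, predicted in samples:
        totals[true_lang] += 1
        correct[true_lang] += true_lang == predicted
    return {lang: correct[lang] / totals[lang] for lang in totals}


# Made-up evaluation data: "yo" is the ISO 639-1 code for Yoruba.
samples = [("en", "en"), ("en", "en"), ("en", "en"), ("en", "es"),
           ("yo", "en"), ("yo", "yo")]
print(accuracy_by_language(samples))  # {'en': 0.75, 'yo': 0.5}
```

A large gap between languages is a signal that the detector needs more training data for the weaker ones before it is trusted to route their content.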

Conclusion

The digital universe thrives on user-generated content.

As this content shapes our online experiences, the imperative of effective moderation becomes undeniable. Language detection, an unsung hero, stands at the forefront of this endeavour.

It is both a tool and a guardian, ensuring content aligns with platform ethos and user expectations.

The journey ahead promises innovation, but the goal remains unchanged: a safe, inclusive, and respectful digital community for all.